Collective Intelligence + AI: Why Swarmi’s Human-AI Hybrid Is Stronger Than AI Alone

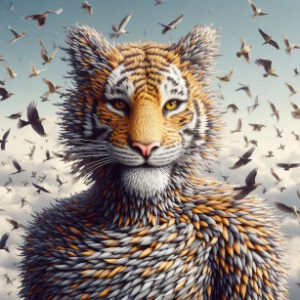

When we combine Swarmi’s community-driven “swarm intelligence” with AI, we don’t just get a smarter tool. We get something entirely new: a system that’s more adaptive, more ethical, and more powerful than either humans or AI could achieve alone.

Here’s why this hybrid approach isn’t just better—it’s revolutionary for a movement like ours:

1. The Power of Swarm Intelligence + AI

A. AI Alone: Fast, But Flawed

- Strengths: Crunches data, spots patterns, answers questions at scale.

- Weaknesses:

  - No real understanding, just statistical mimicry.

  - Biased by its training data (often corporate, often opaque).

  - No moral compass: it optimizes for whatever you tell it to, even if that’s harmful.

B. Human Swarm Intelligence Alone: Wise, But Slow

- Strengths:

  - Deep understanding of context, ethics, and nuance.

  - Adaptive: can change its mind when new info arises.

  - Values-driven: prioritizes what matters, not just what’s efficient.

- Weaknesses:

  - Slow to scale: can’t answer 1,000 questions at once.

  - Inconsistent: different people might explain WAO’s mission differently.

  - Hard to organize: great ideas get lost in noise without structure.

C. The Hybrid: Best of Both Worlds

*Research suggests that combining human collective intelligence with AI can lead to better outcomes than either alone (Sources: PMC, arXiv, SAGE).*

| Aspect | AI Alone | Swarm Intelligence Alone | Swarmi’s Hybrid Model |
|---|---|---|---|
| Speed | ⚡ Instant | 🐢 Slow | ⚡ Instant and 🧠 Thoughtful |
| Ethics | ❌ None (just follows data) | ✅ High (human judgment) | ✅ Values baked in (trained on *our* debates) *Hybrid systems are perceived as more trustworthy (PMC, 2024).* |
| Adaptability | ❌ Static (unless retrained) | ✅ High (humans learn) | ✅ Evolves with us (community updates it) *Collective intelligence improves over time (SAGE, 2025).* |
| Bias | ❌ Hidden (corporate data) | ✅ Transparent (our values) | ✅ Our bias (intentionally aligned with WAO) *AI can help de-bias collective intelligence (arXiv, 2024).* |
| Scalability | ✅ High | ❌ Low | ✅ Scales *our* voice, not a corp’s *Hybrid models outperform either alone (PMC, 2024).* |
| Decision Quality | ❌ Limited context | ✅ Rich context | ✅ Best of both (data + human wisdom) *Hybrid decisions are more accurate and trusted (SAGE, 2025).* |
| Trust | ❌ Low (black box) | ✅ High (human) | ✅ Highest (transparent + explainable) *Explainable AI increases trust (arXiv, 2024).* |

2. How WAO’s Hybrid Model Works

A. The Community Trains the AI

- Not just data, but our data:

  - The AI learns from our manifestos, debates, and decisions, not random internet text.

  - Example: If Swarmi prioritizes transparency, the AI won’t just say so; it’ll show its reasoning in its responses (e.g., “Here’s how we decided this. Want the full discussion?”).

- Continuous feedback loops:

  - Members correct the AI when it’s off-brand (e.g., “Too formal, sound more like us!”).

  - The AI adapts in real time, getting closer to our collective voice.

B. The AI Amplifies the Swarm

- Instant consensus-building:

  - The AI can summarize hundreds of community discussions in seconds, highlighting where we agree and where we need to debate more.

  - Example: “80% of Swarmi members support X, but here are the 3 key concerns from the other 20%…”

- Bridging gaps:

  - New members get onboarded with the community’s wisdom, not just a FAQ.

  - Example: “Here’s how we’ve talked about consumer power in the past. What’s your take?”
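
To make the consensus summary concrete, here is a minimal sketch of how a line like “80% of members support X” could be produced once positions have been collected. The `Response` record and the `summarize` function are hypothetical names used for illustration, not part of any existing Swarmi tool, and the sketch assumes each concern has already been reduced to a short label (by a human or by the AI).

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Response:
    """One member's position on a proposal (hypothetical record shape)."""
    member: str
    supports: bool
    concern: Optional[str] = None  # short label for a concern, if any


def summarize(responses: list[Response], top_n: int = 3) -> str:
    """Report the support share and the most common concerns among dissenters."""
    if not responses:
        return "No responses yet."
    supporters = sum(r.supports for r in responses)
    share = round(100 * supporters / len(responses))
    # Count concerns only from members who did not support the proposal.
    concerns = Counter(
        r.concern for r in responses if not r.supports and r.concern
    )
    top = [label for label, _ in concerns.most_common(top_n)]
    summary = f"{share}% of members support this proposal."
    if top:
        summary += " Key concerns from the rest: " + "; ".join(top) + "."
    return summary
```

The counting is the easy part. Turning free-form discussion into positions and concern labels is where the AI would do the heavy lifting, and where members should be able to check its work.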

C. Humans Stay in Control

- The AI proposes, the community disposes:

  - The AI might draft a response, but humans approve, edit, or reject it.

  - Example: “Here’s a draft for the blog post. Does this match our tone? [Yes/No/Edit].”

- No black boxes:

  - We’ll publish the AI’s training data (our discussions) and let members audit its responses.

  - Example: “Why did the AI suggest this? [Show sources].”
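
One way to picture “the AI proposes, the community disposes” is as a small review step that every draft has to pass through. The sketch below is illustrative only: `Draft`, `Verdict`, and `review` are hypothetical names, and the `sources` field assumes the AI can list which discussions a draft drew on.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Verdict(Enum):
    APPROVE = "yes"
    REJECT = "no"
    EDIT = "edit"


@dataclass
class Draft:
    text: str
    # Discussions the draft drew on, kept so members can ask "why this?"
    sources: list[str] = field(default_factory=list)
    published: bool = False


def review(draft: Draft, verdict: Verdict, edited_text: Optional[str] = None) -> Draft:
    """Apply a human verdict. Nothing is published without one."""
    if verdict is Verdict.REJECT:
        return Draft(draft.text, draft.sources, published=False)
    if verdict is Verdict.EDIT:
        if not edited_text:
            raise ValueError("an EDIT verdict needs the revised text")
        # The human's wording replaces the AI's; the sources stay attached.
        return Draft(edited_text, draft.sources, published=True)
    return Draft(draft.text, draft.sources, published=True)
```

Because `published` defaults to `False` and only `review` flips it, a draft that nobody has looked at can never go out by accident.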

3. Real-World Examples of Hybrid Intelligence

A. Wikipedia + Bots

- How it works: Humans write articles; bots flag likely errors, suggest links, and revert vandalism.

- Why it’s powerful: Human editors supply the knowledge while automated tools handle repetitive maintenance, which has helped Wikipedia become the world’s largest encyclopedia.

- Swarmi version: Our AI could draft explanations of complex topics, while the community refines and debates them.

B. Open-Source Software (e.g., Linux)

- How it works: Thousands of developers contribute code, and AI coding assistants (like GitHub Copilot) can suggest improvements along the way.

- Why it’s powerful: The swarm builds the core, while AI accelerates the process.

- Swarmi version: Our AI could propose app features, while the community votes and improves them.

C. Citizen Science (e.g., Foldit)

- How it works: Players solve protein-folding puzzles; software scores and analyzes their solutions.

- Why it’s powerful: Human players have found solutions that automated methods missed, while software evaluates candidates at scale.

- Swarmi version: Our community could brainstorm solutions to systemic problems (e.g., “How do we democratize data?”), while the AI organizes and spreads the ideas.

4. What This Means for Swarmi

A. Faster, Smarter Decisions

- Problem: Big decisions (e.g., “Should we partner with X?”) take forever in flat hierarchies.

- Solution: The AI summarizes past debates, highlights trade-offs, and proposes options—so the community can focus on what matters.

B. A Self-Reinforcing Culture

- Problem: As we grow, new members might not “get” Swarmi’s vibe.

- Solution: The AI embodies our culture in every interaction, so newcomers learn by osmosis.

C. Defense Against Manipulation

- Problem: Trolls, bots, or bad actors could derail discussions.

- Solution: The AI can flag suspicious activity (e.g., “This argument doesn’t match our past debates—is it legit?”) while the community decides how to respond.
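
As a rough illustration of “doesn’t match our past debates,” the sketch below flags a post for human review when it shares very little vocabulary with anything the community has discussed before. This is a deliberately crude heuristic (word overlap, measured with Jaccard similarity), and the function names and the `threshold` value are assumptions made for the example. A real system would need something more robust, and a flag here only means “a human should look,” never “remove.”

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word set for a piece of text."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two sets have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def needs_review(post: str, past_debates: list[str], threshold: float = 0.1) -> bool:
    """True when the post overlaps little with every past debate."""
    post_tokens = tokens(post)
    best = max(
        (jaccard(post_tokens, tokens(d)) for d in past_debates),
        default=0.0,  # no history yet: everything goes to a human
    )
    return best < threshold
```

Genuinely new ideas will also score low on a measure like this, which is exactly why the decision stays with the community.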

D. Scaling Our Impact

- Problem: We have big ideas, but limited time to explain them.

- Solution: The AI answers FAQs, drafts posts, and moderates discussions—freeing us to focus on action.

5. Potential Pitfalls (and How We Avoid Them)

| Risk | Our Solution |
|---|---|
| AI sounds robotic/corporate | Train it on our debates and manifestos, not generic data. Let members edit its responses in real time. |
| Community loses control | Humans always have the final say. The AI suggests; we decide, edit, or reject. |
| Bias in the AI | Publish its training data (our discussions) and let members challenge its answers. |
| Over-reliance on AI | Use it as a tool, not a leader. Keep human debates and creativity at the core. |
| Lack of transparency | Open-source the AI’s logic and decisions. Explain why it suggests what it suggests. |
| AI doesn’t understand context | Combine AI suggestions with human review for nuanced topics. Use it to augment, not replace, human judgment. |

6. The Big Vision: A Self-Improving Collective Brain

Imagine Swarmi in 5 years:

- The AI has learned from thousands of our discussions—it doesn’t just answer questions, it anticipates them.

- New members feel at home immediately because the AI speaks our language.

- Decisions happen faster because the AI summarizes the community’s wisdom in real time.

- Our ideas spread further because the AI helps translate, adapt, and share them without losing our voice.

This isn’t just AI assisting humans. It’s humans and AI co-evolving—each making the other smarter.

7. How You Can Help Build This

- Contribute to the training data: Share your Swarmi discussions, debates, and writings.

- Test the AI: Poke holes, suggest improvements, and flag mistakes.

- Debate the ethics: How should we use this tool? What’s off-limits?

- Spread the word: The more people engage, the smarter the hybrid becomes.

The Bottom Line

AI alone is fast but shallow. Swarm intelligence alone is wise but slow. Together, they’re unstoppable.

At Swarmi, we’re not just using AI—we’re redefining it as a tool for collective power. Because the future isn’t about humans vs. machines—it’s about communities and code working as one.